Nesta’s Centre for Collective Intelligence has developed a new approach for involving the public in assessing AI tools for public services. Our latest pilot asked the public how they felt about Magic Notes, an AI tool that supports social workers with note-taking.

In January 2025, the UK Government published the ambitious AI Opportunities Action Plan, which described the potential for transforming public sector services through widespread integration of AI tools. At the time, Prime Minister Keir Starmer highlighted a number of promising use cases, including using AI to reduce the time spent on paperwork in social care.

The optimism around using AI to support the administrative tasks of social workers is unsurprising. Councils already spend around 40% of their budgets on social care, and demand grows every year. At the same time, social workers report high levels of burnout and stress. But ensuring the responsible adoption of AI in a public service like social care is not straightforward. The stakes are high: mistakes have the potential to affect hundreds of thousands of people, including some of the most vulnerable. The UK public are sceptical. When asked whether they trust the government to use AI for tasks in different public service areas, people rank social care and defence lowest. Our own polling shows that over three-quarters of UK adults (77%) think it is important or essential for the public to be consulted before an AI tool is rolled out in social care [1].

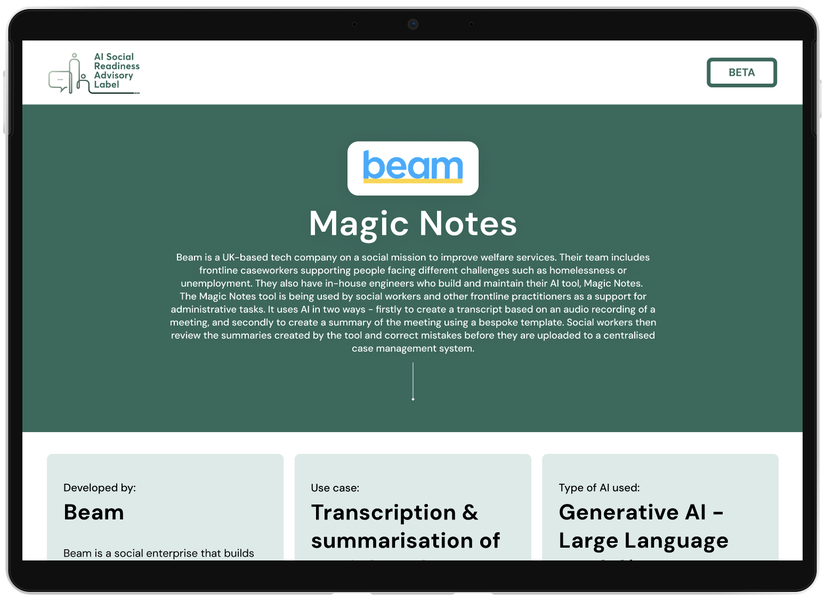

This is why our second AI Social Readiness pilot focused on Magic Notes, a tool developed by the UK-based technology company Beam. It supports social workers with administrative tasks, using AI in two ways: 1) to create a transcript from an audio recording of a meeting, and 2) to create a summary of the meeting using a bespoke "template". Social workers review and correct the summaries before they are uploaded to a centralised case management system. The tool is currently being piloted by more than 100 councils in the UK.

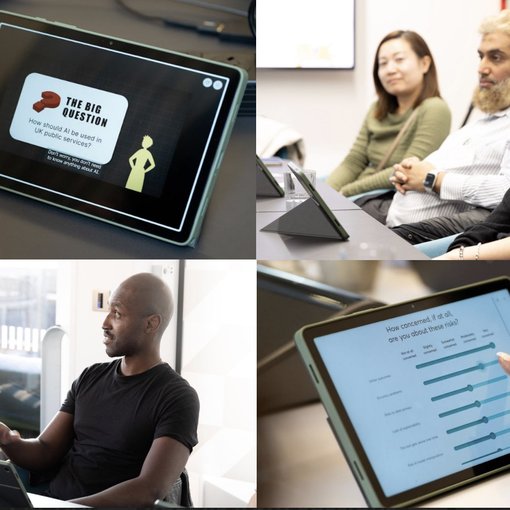

During September and October, we ran 18 deliberative sessions around the country with 137 members of the public and social care service users to ask what they thought about Magic Notes. We explored how they weighed the tool's potential benefits against its risks, and measured whether they thought its use would benefit the social care process. We also asked what safeguards would need to be in place for them to feel comfortable with local authorities using the tool.

The resulting AI Advisory Label for the Magic Notes tool summarises the key findings from the deliberations and sets out clear actions that local authorities planning to use the tool can take to implement it responsibly and in a way that maintains public trust.


Headlines: what did the public think about Magic Notes?

- Most people felt positive about local authorities using the Magic Notes tool. 83% of the general public and 75% of social care service users felt positive about social workers using Magic Notes to improve the social care process.

- People saw that the tool could help social workers by easing administrative burdens. They believed using AI to cut down on bureaucratic inefficiencies could also help attract new people to the profession. However, they also felt that an AI tool alone was not enough to address larger, systemic issues within social care.

- A key condition for using Magic Notes was obtaining informed consent and ensuring the tool is appropriate for each case. While this is already standard local authority protocol, people wanted strong assurance that social workers would have robust fallback mechanisms and clear guidance for cases where consent is not given. They also had reservations about the tool being used in meetings with young people or victims of abuse.

- The most concerning risk for Magic Notes was inaccuracy, whether caused by transcription errors or by complacency among social workers. People stressed the importance of social workers adding context and emotional nuance to summaries and correcting transcription mistakes. However, they worried that, over time, social workers might do this less as they grew complacent.

- 89% of people agreed that human oversight is a necessary safeguard for the responsible use of Magic Notes. People were concerned about social workers potentially becoming too reliant on the tool and not checking summaries. They wanted local authorities to establish robust oversight mechanisms, including spot checks and quality assurance reviews by managers.

- Participants emphasised the importance of involving both social workers and the people receiving care in assessing AI tools. When weighing the benefits and risks, a key factor for them was the results of pilot studies, which showed that 93% of social workers valued Magic Notes and wanted to keep using it. Participants also considered it vital that service users, not only practitioners, help evaluate these AI tools.

- The deliberation process helped uncover differences between social care service users and the general public. Although polling responses showed no statistically significant differences, the discussions revealed some notable variations. For example, groups with current service users were more likely to raise concerns about the accuracy of current manual note-taking and offered more varied suggestions for mitigating long-term risks. Specifically, they wanted to be involved in testing and reviewing summaries from Magic Notes to ensure accuracy.

- People value having a say through the AI Social Readiness Assessment, but they want public AI assurance to take place before widespread deployment. Although people saw the assessment as a good way to involve the public in decisions about public sector AI, some questioned whether it should have taken place sooner, before Magic Notes was piloted by over 100 councils.

What next?

Our ambition is for the AI Social Readiness process and label to become a gold standard for bringing in the voice of the public when assessing whether new AI tools are ready and acceptable for use in public services.

We’ll publish more results summarising what we’ve learned across both pilots in the coming weeks, including the impact of social assurance on public confidence in public sector use of AI.

If you have an AI tool intended for use, or already in use, in UK public services and you would like it to go through the AI Social Readiness Advisory process, or you would like to explore other ways of engaging the public in AI development and oversight, please get in touch by emailing [email protected].

Our resources

The full AI Social Readiness Assessment of the AI tool Magic Notes.

Read the example label for Beam's AI tool, Magic Notes.

Results from sessions with social care service users for the AI tool Magic Notes.

[1] We polled 2,050 UK adults weighted to nationally representative criteria. Fieldwork took place 19-21 November 2025.