AI has the potential to enhance many vital public services, from health and social care to education. But when it comes to public trust, there’s a problem: only 26% of the UK public think adoption of AI could make public services better.

“Many organisations recognise that, to unlock the potential of AI systems, they will need to secure public trust and acceptance. This will require … that human values and ethical considerations are built-in throughout the AI development lifecycle.”

DSIT, Introduction to AI Assurance

Public agencies recognise the importance of addressing public concerns, but they often lack the skills and resources to bring the public into decision-making about how AI is used.

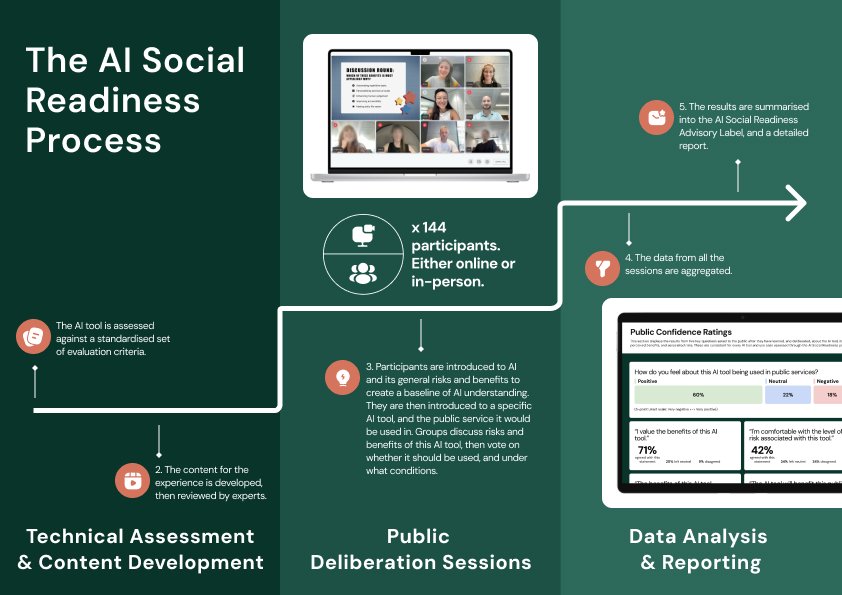

The AI Social Readiness process is a new assurance methodology developed by the Centre for Collective Intelligence. It asks the public what they think about using specific AI tools in UK public services and summarises their views in a clear, easy-to-understand AI Social Readiness Advisory Label.

The label aims to fill an important gap in the AI assurance landscape. It measures public confidence and trust in specific AI tools used in UK public services and offers practical advice on how to address public concerns.

It has been designed to support public sector leaders as they make decisions about AI procurement, deployment and risk management. It should be used alongside other information, such as technical evaluations and compliance processes.

In May and June 2025, we completed the very first AI Social Readiness process. Over two weeks, 144 people from across the UK took part in 18 public deliberation workshops: half held online, and half in person in Manchester, Newcastle and London. In this first pilot, participants assessed “Consult”, a tool that uses AI to analyse responses to public consultations.

People taking part in the AI Social Readiness process for the Consult tool, developed by the Government’s Incubator for AI.

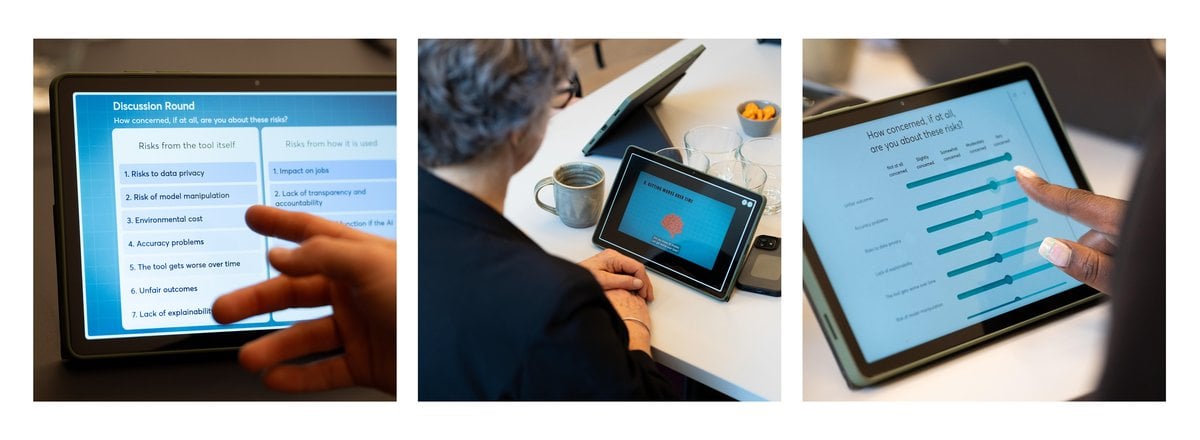

The AI Social Readiness process is immersive, educational and collaborative. During the experience, each of the 18 groups undertakes a “mission” as part of a “Public AI Task Force”. Guided by expert facilitators, the groups work through a structured format in which they watch short videos, discuss them and share their views on the use of an AI tool in public services.

The videos break down complex topics into easy-to-understand information, so that people from a wide range of backgrounds can have informed, balanced discussions about the potential risks and benefits of a specific tool and set out any recommendations or conditions for its use.

The first video shown in the AI Social Readiness public deliberation workshop

The experience is split into five sections. Each section contains a combination of videos, polls and group deliberation.

The Mission Deep Dive and The Dilemmas sections of the AI Social Readiness process contain tool-specific videos. All other content, including the videos in The Briefing and The Groundwork sections (eg, What is AI?), stays the same for every AI tool that goes through the process.

An overview of the process for creating the AI Social Readiness Advisory Label.

Once a tool has been chosen for the AI Social Readiness process, we carry out a technical assessment against a standardised list of risks drawn from existing AI risk frameworks. We work with an independent AI expert who reviews information from sources such as pilot evaluations or the Algorithmic Transparency Recording Standard. We use the assessment to identify the information the videos need to include for the public to consider. The videos are then checked for accuracy and balance.

The AI Social Readiness process isn’t just a survey or a focus group. It’s a chance for people to really shape how AI is used in public services. The sessions are delivered through Zeitgeist, the Centre for Collective Intelligence’s digital platform, using an approach known as deliberative polling. This captures both quantitative and qualitative data from individuals and groups as they learn about the tool and weigh up its benefits against its risks. In person, participants sit around a table in their group and use tablets to watch the videos and record their responses. Online, they join a video-calling version of Zeitgeist that hosts the same videos and polls.

We know that people’s opinions on AI depend on context. Instead of asking high-level questions about attitudes, our process zooms in on specific AI tools in real settings and asks questions such as “With everything you’ve heard about the benefits and risks, on balance, how do you feel about this tool being used?” and “Which safety measures do you think are the most important for deployment?”

After all the sessions have taken place, we combine the data to understand how the UK public feel on balance. As well as analysing the polls, which capture overall public confidence, the most concerning risks and the most important safeguards, we dig into what people said during the group discussions. This shows us why groups made the choices they did. It also forms the basis of recommendations for tool developers and for organisations planning to use the tool, helping them address public concerns sooner rather than later. The main findings are captured on the AI Social Readiness Advisory Label, and we also publish an accompanying report that includes the tool developers’ response to the findings and any actions they plan to take.
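The quantitative part of this step, pooling poll answers from every session into headline figures, can be illustrated with a short sketch. It is not the Centre for Collective Intelligence’s actual analysis: the `PollResponse` record, the 1–5 confidence scale, the “4 or above counts as comfortable” threshold and the `summarise` function are all hypothetical, and the sample responses are toy data rather than results from the Consult pilot.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PollResponse:
    """Hypothetical record of one participant's poll answers in one session."""
    session_id: str
    confidence: int             # ASSUMED 1-5 scale: 1 = very uncomfortable, 5 = very comfortable
    top_risks: list[str]        # risks this participant found most concerning
    top_safeguards: list[str]   # safeguards this participant ranked most important


def summarise(responses: list[PollResponse], top_n: int = 3) -> dict:
    """Pool responses from all sessions into label-style headline figures (hypothetical)."""
    if not responses:
        raise ValueError("no responses to summarise")
    # ASSUMPTION: a score of 4 or 5 means "on balance, comfortable with the tool".
    comfortable = sum(1 for r in responses if r.confidence >= 4)
    # Tally how often each risk and safeguard was picked across every participant.
    risk_votes = Counter(risk for r in responses for risk in r.top_risks)
    safeguard_votes = Counter(s for r in responses for s in r.top_safeguards)
    return {
        "participants": len(responses),
        "sessions": len({r.session_id for r in responses}),
        "share_comfortable": comfortable / len(responses),
        "top_risks": [name for name, _ in risk_votes.most_common(top_n)],
        "top_safeguards": [name for name, _ in safeguard_votes.most_common(top_n)],
    }


# Toy data for illustration only: not findings from any real session.
responses = [
    PollResponse("online-01", 4, ["accuracy", "bias"], ["human review"]),
    PollResponse("online-01", 2, ["bias"], ["human review", "transparency"]),
    PollResponse("manchester-01", 5, ["accuracy"], ["transparency"]),
    PollResponse("manchester-01", 3, ["bias", "privacy"], ["human review"]),
]
print(summarise(responses, top_n=2))
```

A simple tally like this only covers the polls. The qualitative analysis of what groups said in discussion, which explains why they voted as they did, still has to be done by reading and interpreting the conversations.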

Our ambition is for the AI Social Readiness Advisory Label to become a gold standard for bringing the public’s voice into assessments of whether new AI tools are ready and acceptable for use in public services.

If you’re developing an AI tool and are interested in exploring a public deliberation process like the one we used, or in collaborating with us more broadly, we’d love to hear from you. Get in touch by emailing [email protected].